UM-Flint student uses technology to build a better future for herself and others

The science fiction trope of futuristic humans working alongside robots, before the mechanical menaces eventually turn on their creators, is as old as the genre itself.

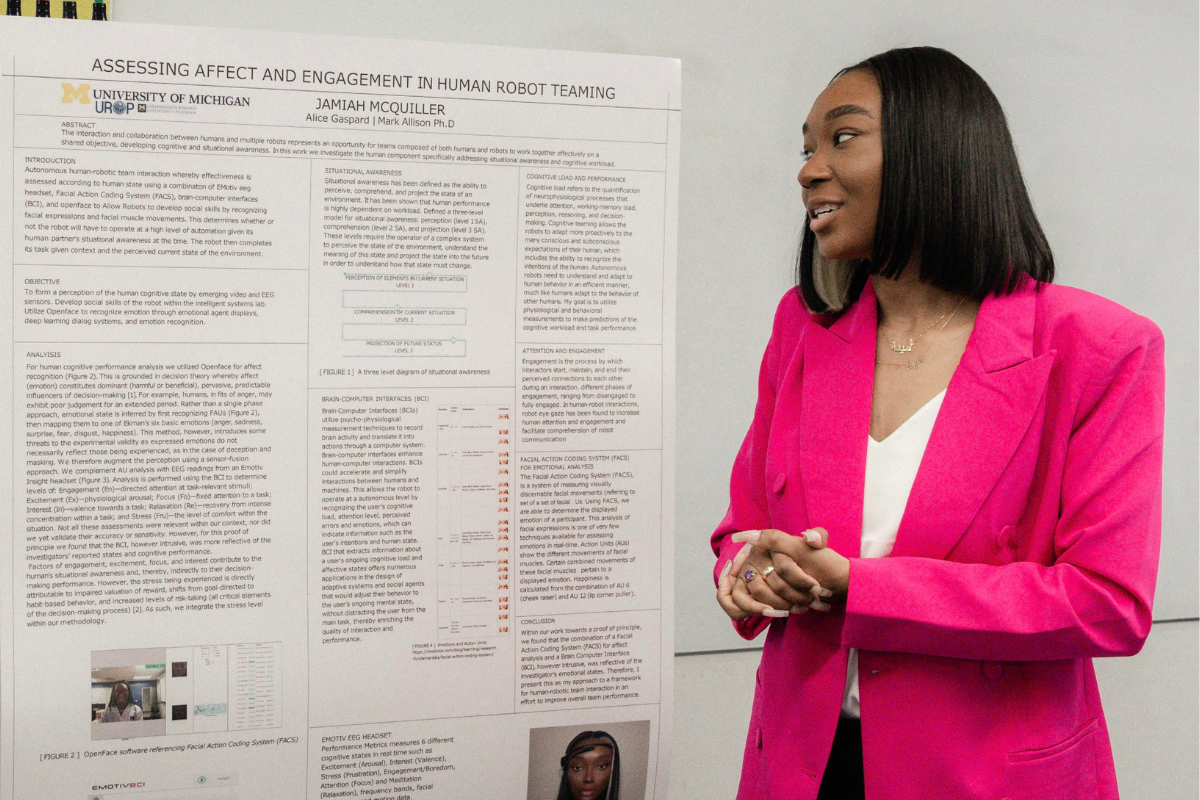

However, for Jamiah McQuiller, minoring in computer science isn't about whether she can build her own personal R2-D2 to pal around with, or develop the next smart whatever. Instead, it is providing an avenue to something even more important: inclusivity.

"I actually got into the technology field to represent the historically excluded," said McQuiller. "Being underrepresented in any space is intimidating, but it's especially damaging in tech because we're now creating digital spaces that can or cannot be inclusive.

"I want to be involved in helping create inclusive spaces around race, gender and sexual orientation. In the area of tech, I want to say, 'We are equally belonging and our presence here is purposeful too.'"

To that end, the junior cybersecurity major from Port Huron enrolled in the University of Michigan-Flint's Undergraduate Research Opportunity Program (UROP), which is designed to support collaborations between undergraduate students and faculty researchers.

UROP allows students to gain hands-on research experience, in paid or volunteer positions, working alongside faculty on cutting-edge projects. Faculty, in turn, have the opportunity to mentor enthusiastic and talented students. Because of the diverse nature of the research conducted at UM-Flint, undergraduate students at any level and from any academic discipline can participate in UROP.

McQuiller, who expects to graduate in 2025, is using her UROP experience to learn how computer science tools can improve the ways humans work alongside robots and interact in virtual spaces. "I enrolled in UROP because I aspire to gain additional experience and exposure, which will be beneficial for my job search upon graduation," she said.

Teamwork with Robots

Early this year, McQuiller aided Mark Allison, associate professor of computer science, who is part of a large National Science Foundation-funded research project looking into teamwork and robotics.

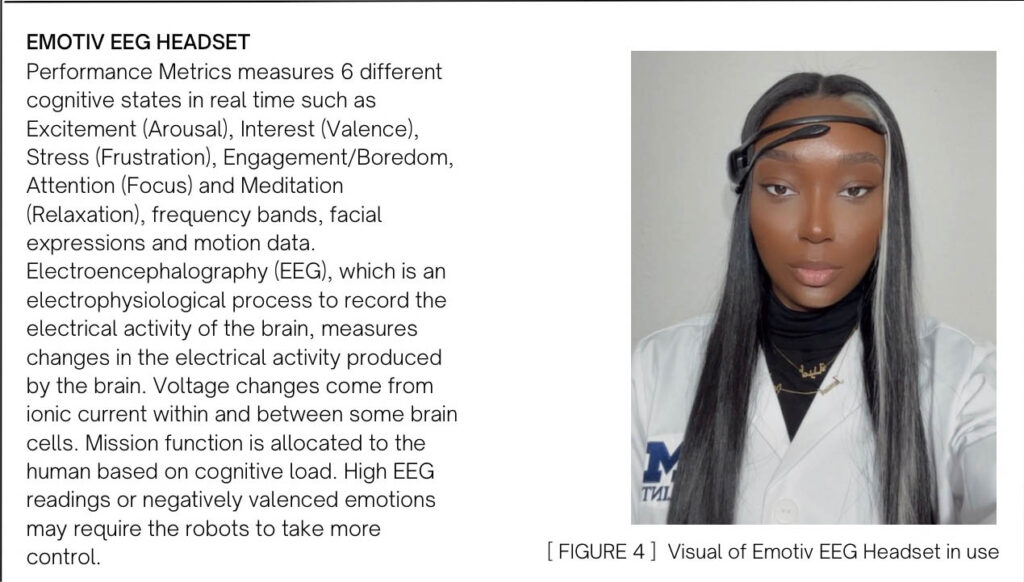

For her part, McQuiller helped to analyze the software that would enable a robot to detect how a person may be feeling using an array of state-of-the-art data collection tools, including an Emotiv EEG headset, the Facial Action Coding System (FACS), a brain-computer interface (BCI), and OpenFace.

Some of these tools fall in the realm of autoencoders, which are a specific kind of artificial neural network. From a machine learning perspective, they are designed to learn efficient encodings of massive batches of data and represent them in useful ways by training the network to ignore signal 'noise.' An easier way to think of it is that these tools can collect a great deal of specialized data about a person, but only certain parts of that data are useful for understanding how the person is feeling. Our brains do this every day; if we didn't ignore big chunks of the data we receive through our senses, our heads would explode.
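For readers who want to see the idea in code, here is a minimal sketch of a tiny autoencoder. It is not the software used in the project. It assumes made-up "sensor readings" of three numbers each that are really driven by one hidden signal plus random noise, and every name and setting in it (`make_data`, `train`, `reconstruct`, the learning rate, the starting weights) is hypothetical. The network squeezes each reading down to a single number and then expands it back to three, which forces it to keep the shared signal and drop most of the noise.

```python
import random

# Hypothetical hidden direction that every clean reading lies along (length 1).
DIRECTION = [2 / 3, 2 / 3, 1 / 3]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def make_data(n=200, noise=0.1, seed=0):
    """Build toy readings: one hidden signal spread over 3 channels, plus noise."""
    rng = random.Random(seed)
    clean, noisy = [], []
    for _ in range(n):
        s = rng.uniform(-1.0, 1.0)
        row = [s * d for d in DIRECTION]
        clean.append(row)
        noisy.append([v + rng.gauss(0.0, noise) for v in row])
    return clean, noisy


def reconstruct(w, x):
    """Encode 3 numbers into 1 code, then decode back to 3 (tied weights)."""
    code = dot(w, x)
    return [code * wi for wi in w]


def train(noisy, lr=0.05, epochs=100):
    """Gradient descent on squared reconstruction error, one reading at a time."""
    w = [0.5, -0.2, 0.1]  # arbitrary starting weights (an assumption)
    for _ in range(epochs):
        for x in noisy:
            code = dot(w, x)
            residual = [xi - code * wi for xi, wi in zip(x, w)]
            rw = dot(residual, w)
            # Step against the gradient of |x - (w.x) w|^2; the factor 2 is folded into lr.
            w = [wi + lr * (rw * xi + code * ri)
                 for wi, xi, ri in zip(w, x, residual)]
    return w


def mean_sq_error(rows_a, rows_b):
    total = sum((a - b) ** 2
                for ra, rb in zip(rows_a, rows_b)
                for a, b in zip(ra, rb))
    return total / len(rows_a)


if __name__ == "__main__":
    clean, noisy = make_data()
    w = train(noisy)
    denoised = [reconstruct(w, x) for x in noisy]
    print("error before:", mean_sq_error(noisy, clean))
    print("error after: ", mean_sq_error(denoised, clean))
```

Run as a script, it prints the average error of the noisy readings and of the reconstructed readings, each measured against the clean signal. Real autoencoders use many layers and nonlinear units, but the squeeze-then-rebuild principle is the same.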

McQuiller said, "Autoencoders find a way for humans and machines to work together in a healthy way. This is a crucial part of the puzzle." McQuiller also helped analyze how these tools can help robots adjust to the fluctuations in mood and stress of the humans around them.

Some may know of the work by famed researcher Paul Ekman, who theorized that some basic human emotions are innate and shared by everyone and that they are accompanied across cultures by universal facial expressions. With that in mind, McQuiller used FACS and OpenFace's affect recognition software to distinguish the six basic emotions of anger, sadness, surprise, fear, disgust and happiness as, according to researchers, "they show up the same way in the muscles of our face pretty reliably." However, emotional baselines or some cultural differences could skew those results.

After analysis, the team found that a combination of FACS and Emotiv tools pulling in human emotional data, with BCI filtering it into a usable form, could indeed reflect emotional states, a step toward improving team performance between robots and humans. Robots currently on the market have to move at a painfully slow rate when humans are present, which is unacceptable for manufacturers. This project seeks to solve those bottlenecks so that robots can have a type of situational awareness and adjust to the variability in mood and capacity of the humans around them.

We humans do this innately. To learn how to build this ability into the autoencoder programs, Allison's team had to develop a deep understanding of how it actually works in humans. "This experience provided me with a fresh perspective on emotions," McQuiller said. "Acquainting yourself with these principles grants you an opportunity to more effectively evaluate someone's emotional state and collaborate with them."

VR and Heart Models

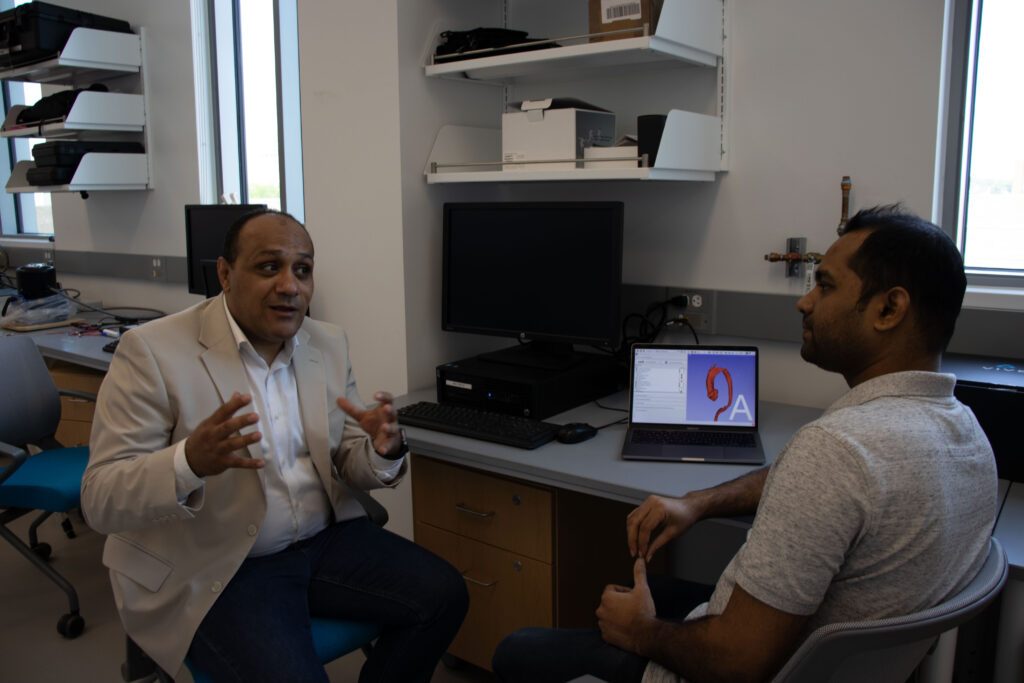

Currently, McQuiller is working as a research assistant in UM-Flint's Computational Medicine and Biomechanics Lab, under the supervision of Yasser Aboelkassem, assistant professor of digital manufacturing technology. Her role in Aboelkassem's lab is to develop "Patient-Specific VR" software that can be used to improve the way cardiovascular diseases are visualized, analyzed and diagnosed.

Through this new venture, the hope is that McQuiller's software tool can be integrated with VR technology to visualize and analyze 3D cardiac medical images. The process involves taking the typically seen imagery from standardized MRI data and turning it into 3D models. McQuiller then takes those models and inserts them into a virtual surgery center she has created.

"Ultimately, our goal is to empower physicians to detect abnormalities early in patients, thereby improving medical outcomes," said Aboelkassem.

The cardiac VR platform will be patient-specific and is expected to help during the diagnostic phases for patients with cardiovascular diseases. Currently, a radiologist or surgeon has to imagine the aortic structure by looking at individual layers, or slices, of the whole. This technology will enable them to view a holistic image of the aorta, see where damage is located, and modify their treatment plan with greater confidence.

Taking it a step further, the team is integrating real-time data with 4D magnetic resonance images, which help them analyze blood flow inside the arterial network.

For McQuiller, UM-Flint isn't just a place to learn; it's a place to make a difference for herself and for others. "This university is a springboard to a promising future. Through my research in UROP and at the CIT, I aspire to make a meaningful impact by enabling doctors' offices to swiftly detect vascular damage and thereby potentially saving the lives of many."


Rob McCullough

Rob McCullough is the communications specialist for the College of Innovation & Technology. He can be reached at [email protected].